Top Skills Used

- ROS, Gazebo

- Machine learning

- Computer vision

- Python data pipelines

- Data augmentation

Project Overview

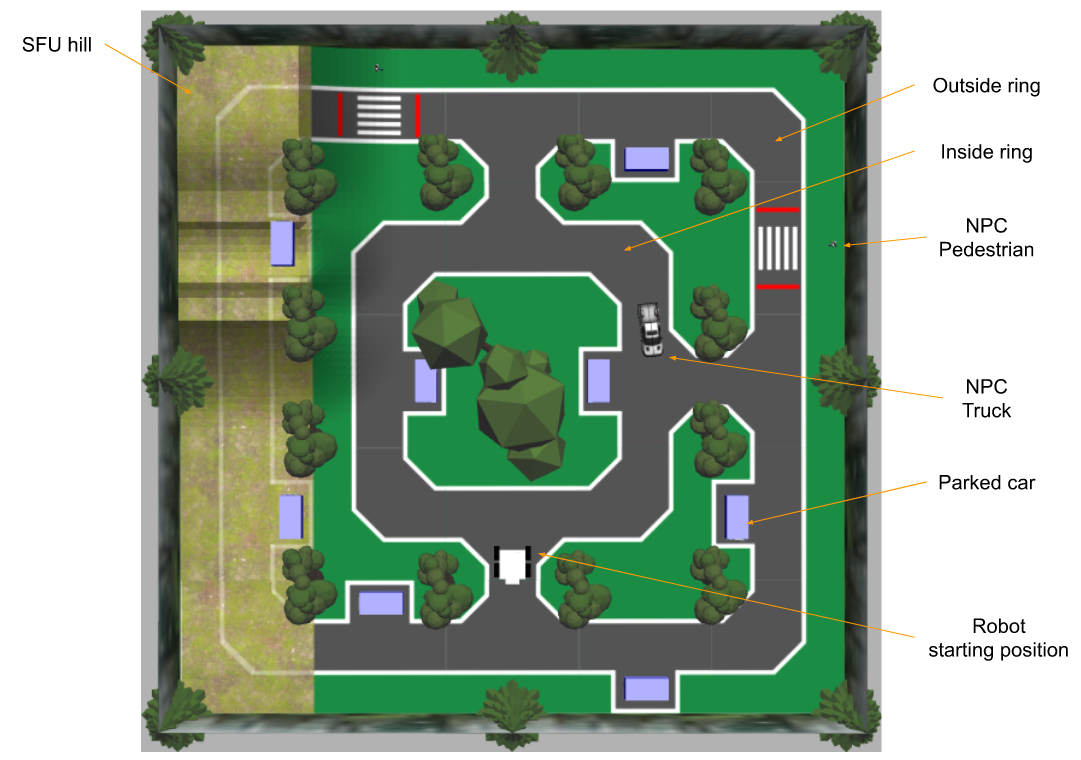

As part of a machine learning course (ENPH 353), I worked in a team of two to train a virtual autonomous agent to navigate a 3D simulated obstacle course. The agent had to drive around the virtual environment, identify parked cars, and accurately read their licence plates using computer vision, all fully autonomously. Our code repository for this project is available on my GitHub.

The simulation was set up in Gazebo Gym and included parked cars along the roadside, pedestrians crossing at random, and a moving NPC truck. Our objective was to complete the course as quickly as possible while correctly reading all eight randomly generated plates. Points were deducted for driving off the road or colliding with obstacles, so precise navigation and perception were crucial.

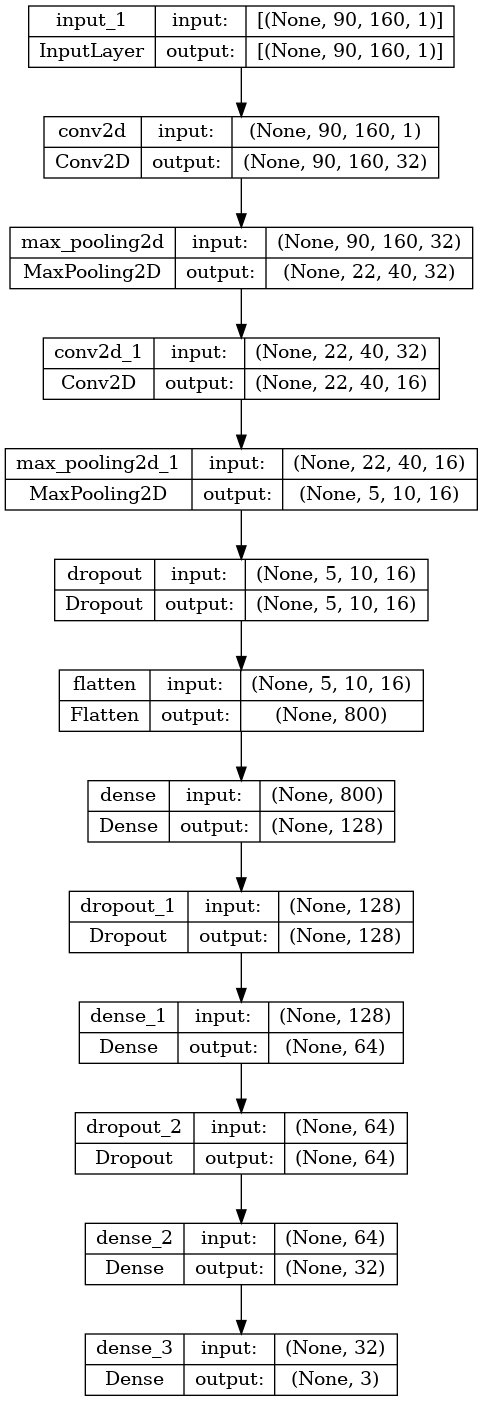

To achieve autonomous driving, we used imitation learning. First, we drove the agent manually through ROS, recording thousands of images from the agent’s cameras, each paired with the control input we gave at that moment (turn left, turn right, or go straight). We then used this dataset to train a convolutional neural network that predicts the steering command from the camera input. Our inspiration came from how Tesla trains its autonomous driving systems.
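The labelling step described above can be sketched roughly as follows. This is a minimal illustration rather than the project's actual pipeline: the function names, the `deadband` threshold, and the idea of deriving the three classes from the angular velocity of the drive command are all assumptions made for the example.

```python
from collections import Counter

# Hypothetical label set matching the three commands named in the text.
ACTIONS = ("left", "straight", "right")


def action_from_angular_velocity(angular_z, deadband=0.1):
    """Map a steering command to one of three discrete actions.

    Assumes the usual ROS convention that positive angular z is a left
    (counter-clockwise) turn. The deadband value is illustrative only.
    """
    if angular_z > deadband:
        return "left"
    if angular_z < -deadband:
        return "right"
    return "straight"


def one_hot(action):
    """Encode an action as a one-hot list, a common classification target."""
    return [1.0 if a == action else 0.0 for a in ACTIONS]


def build_dataset(recordings):
    """Turn (image, angular_z) recordings into (image, one-hot label) pairs."""
    return [
        (image, one_hot(action_from_angular_velocity(angular_z)))
        for image, angular_z in recordings
    ]


def class_counts(dataset):
    """Count samples per action, which helps spot class imbalance."""
    counts = Counter()
    for _, label in dataset:
        counts[ACTIONS[label.index(1.0)]] += 1
    return dict(counts)
```

Checking the class counts before training is worthwhile in a setup like this, because manual driving tends to produce far more "go straight" samples than turns, and a classifier trained on such data can learn to ignore the rarer classes.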

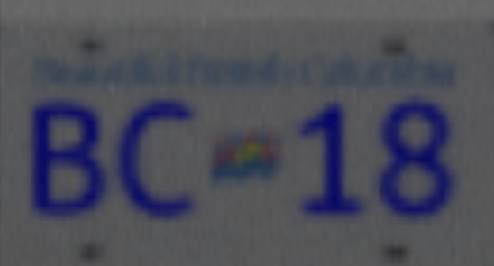

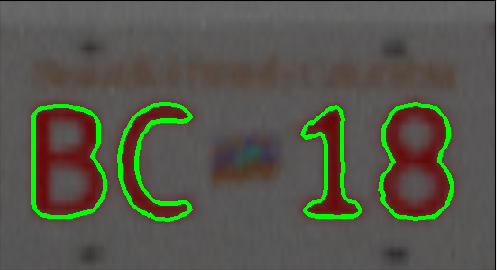

We also used computer vision techniques to detect and read the licence plates. Once a plate was located, a second neural network, trained on augmented data, recognized its characters. This was challenging because the plate images were blurry. To improve accuracy, we averaged the results across multiple frames of the same plate.
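One way to average results across frames is sketched below. It assumes the character network outputs a probability vector per plate position (for example, softmax scores); the text does not say exactly what was averaged, so the `ALPHABET` ordering and the `average_plate_reading` function are hypothetical.

```python
import string

# Assumed class ordering: uppercase letters followed by digits.
ALPHABET = string.ascii_uppercase + string.digits


def average_plate_reading(frame_probs):
    """Combine per-frame character probabilities into one plate string.

    frame_probs is a list of frames. Each frame is a list with one
    probability vector (length len(ALPHABET)) per character position.
    The vectors are averaged over frames, then the most likely
    character is chosen for each position.
    """
    if not frame_probs:
        raise ValueError("at least one frame is required")

    n_frames = len(frame_probs)
    n_positions = len(frame_probs[0])
    plate = []
    for pos in range(n_positions):
        # Mean probability of each class at this position across all frames.
        mean = [
            sum(frame[pos][k] for frame in frame_probs) / n_frames
            for k in range(len(ALPHABET))
        ]
        best = max(range(len(ALPHABET)), key=mean.__getitem__)
        plate.append(ALPHABET[best])
    return "".join(plate)
```

Averaging probabilities rather than taking a majority vote over per-frame predictions lets a confident reading from a sharp frame outweigh several uncertain readings from blurry ones.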

In the end, our efforts paid off: we correctly identified all licence plates during the competition. While our agent wasn’t the quickest to navigate the obstacle course, my partner and I were very happy with our precision and consistency in reading the plates. This project inspired me to study machine learning further. In the following semester, I became a teaching assistant for this course, and I later took part in a research project on machine learning applications in lossy image compression.